Rather than making us safer, so-called security back doors will increase the vulnerability of digital systems.

Software, networking, and other technology providers are beginning to see stronger system security measures as a real benefit to their users. In fact, some companies, like Apple, Google, and Yahoo, are aiming to provide such strong security on user data that no one but the user can ever access the user’s information. Law enforcement agencies in the United States have reacted negatively to plans for producing such strong security, insisting that companies must at least provide “back doors” to law enforcement to access user information. Law enforcement specifically wants to require companies to build their products’ encryption and other security systems so that companies could “unlock” the data for law enforcement by using, as one editorial board unfortunately put it, a “secure golden key they would retain and use only when a court has approved a search warrant.”

The problem with the “golden key” approach is that it just doesn’t work. While a golden key that unlocks data only for legally authorized surveillance might sound like an ideal solution (assuming you trust the government not to abuse it), technology companies and their experts don’t actually know how to provide this functionality in practice. Security engineers, cryptographers, and computer scientists are in almost universal agreement that any technology that provides the government with a back door also carries a significant risk of weakening security in unexpected ways. In other words, a back door for the government can easily – and quietly – become a back door for criminals and foreign intelligence services.

The problem is chiefly one of engineering and complexity. Although a government back door might sound conceptually simple, security systems (especially those involving cryptography) are actually incredibly complex. As complexity increases – and a back door necessarily adds complexity – the vulnerability of information systems grows rapidly. Indeed, the cardinal rule of cryptography and security is widely known: keep systems as simple and well understood as possible, by engineers and average users alike.

Yet this rule is not a perfect safeguard. Even relatively simple systems that have been deemed highly secure frequently turn out to have subtle flaws that can be exploited in surprising – and often catastrophic – ways. And that is without adding the significant additional complexity introduced by a back door for law enforcement. The history of cryptography and security is littered with examples of systems that eventually fell prey, even after being fielded for years, to subtle design and implementation flaws initially undetected by their designers.

Worse still, a back door adds exactly the kind of complexity that is likely to introduce new flaws. A back door increases the "attack surface" of the system, providing new points of leverage that a nefarious attacker can exploit. Thus, mandating a back door amounts to building flaws into systems by design. As Apple, Google, and other similarly situated companies point out, why would customers pay for and use systems that make their information more vulnerable to attack?

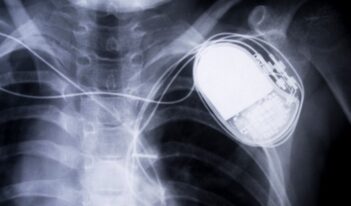

Companies are now awakening to the fact that, in a post-Snowden world, customers are becoming savvier about security and will choose between products on that basis. If companies like Apple, Google, Microsoft, and Cisco (to name just a few) are somehow forced to include government-mandated flaws in their products, these flawed systems will become part of our national critical infrastructure, and the stakes will be far higher than hacked cell phone photos, address books, or emails. Recent security breaches at Target, Home Depot, and even Sony Corporation, possibly in connection with its release of the movie The Interview, offer only a few recent glimpses of the scope of damage that could become possible with the additional vulnerabilities back doors would introduce.

Recognizing that mandatory back doors would require what are essentially security flaws calls to mind a debate widely aired in the early 1990s. At that time, expert and policy debate over these issues successfully blocked government key escrow proposals such as the Clipper Chip. Those of us who argued against these earlier back doors to crypto systems did not assert that a lack of design transparency was the heart of the problem; rather, the problem lay with the introduction of flaws.

No cryptographer in her right mind today would consider designing a system based on “security through obscurity.” The problem ultimately is with the door itself – front or back. If we design systems with lawful intercept mechanisms built in, we have introduced complexity into systems and have made them inherently less secure in the process. This is true even for systems with designs that are open for all to see and inspect.

What is especially disturbing about law enforcement's recent calls for security back doors is the false sense of security they will likely instill in those not familiar with the technological issues at stake – which is practically everyone. Even FBI Director Jim Comey has repeatedly admitted that he does not fully understand the technology behind digital security systems, an admission that is somewhat troubling coming from an FBI Director who is asking companies to alter these very systems.

Furthermore, Comey agreed that he is not interested in reviving the “key escrow” arguments of the first crypto wars in the 1990s, where government would have access to users’ private crypto keys held in escrow; rather, he said he is speaking now more “thematically” about the security problem. If this is the case, however, it is hard to see just what law enforcement has in mind short of a “golden key” created by the “wizards” working at Apple and Google.

Although I have no doubt that some of the smartest people in the world of software reside within companies like Apple, Google, and Yahoo and that they can incorporate many amazing things into their products, I think it is misleading and unproductive for law enforcement agencies to confuse the general public with meaningless distinctions and “magic” solutions – especially ones that will inherently make information systems more vulnerable to attack. We need a national conversation about security and privacy in the Internet age, but we must all do our best to ensure that this conversation is well informed.

This post draws on remarks delivered by Mr. Vagle at a recent seminar held at the University of Pennsylvania and jointly sponsored by the Penn Program on Regulation and the Wharton School’s Risk Management and Decision Processes Center. Joining in the seminar were Matthew Blaze, Associate Professor of Computer and Information Science at Penn’s School of Engineering and Applied Science, and Howard Kunreuther, the James G. Dinan Professor and Co-Director of the Risk Center at the Wharton School.